Taking over a project that another group started is somewhat like trying to bathe a cat: there is a lot of blood and screaming involved. This was very much the case in the work that Team Teams has done on the project that Team cycuc created, though fortunately without the blood. We began our additions to hale-aloha-cli-cycuc soon after writing our technical review of the project. While that review did discuss whether or not a developer could “successfully understand and enhance the system,” all of it was based on examining standards, documentation, and tools, not on actually attempting to work on the project ourselves. Without doing so, it is very difficult to give an accurate answer as to how easily development would progress. Thus, this development is simply a continuation of our review of Team cycuc.

Team Teams still consists of the same members: Jayson Gamiao, Jason Yeo, and me. Team Teams has added three commands to Hale Aloha CLI, which are as follows:

set-baseline: stores baseline values of power consumption for a given source over the course of a given day. These values are then used by the next command.

monitor-goal: uses the baselines from set-baseline in conjunction with the current power consumption to determine if the given source has met a given power reduction goal. The current time, the current power consumption value, and whether or not the source met the power reduction goal are printed at given time intervals until the system receives a carriage return.

monitor-power: prints out the current power consumption of a given source at given time intervals until the system receives a carriage return.

These commands expand the capabilities of Hale Aloha CLI in a few ways. First, none of the prior commands truly depended on stored data. While data was saved before (the energy consumption of the towers for rank-towers, for example), it was always held within a particular command, and once the command finished, that data was lost. In contrast, the set-baseline command explicitly stores data for later retrieval, and the monitor-goal command requires access to that stored data.

Another addition, related to the first, is the need for communication between different commands: the set-baseline command must store its data in a manner that allows monitor-goal to access it.
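To make the hand-off between set-baseline and monitor-goal more concrete, here is a minimal Java sketch of a shared in-memory store. It is not the project's Baseline or HaleAlohaClientUI code: the names BaselineStore, record, baselineAt and goalMet are hypothetical, and the sketch assumes one baseline value per hour of the day and a goal expressed as a percentage reduction from the baseline.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.OptionalDouble;

/**
 * Hypothetical shared store: set-baseline writes a day of hourly values,
 * monitor-goal reads them back later. Data lives only in memory.
 */
final class BaselineStore {
  // Source name (for example, a tower) -> 24 hourly baseline values in kW.
  private final Map<String, double[]> baselines = new HashMap<>();

  /** Called by set-baseline after it has gathered a full day of values. */
  void record(String source, double[] hourlyKw) {
    if (hourlyKw.length != 24) {
      throw new IllegalArgumentException("expected 24 hourly values, got " + hourlyKw.length);
    }
    baselines.put(source, hourlyKw.clone()); // copy so later edits by the caller don't leak in
  }

  /** Baseline for the given hour (0-23), or empty if set-baseline never ran for this source. */
  OptionalDouble baselineAt(String source, int hour) {
    double[] values = baselines.get(source);
    return values == null ? OptionalDouble.empty() : OptionalDouble.of(values[hour]);
  }

  /** Assumed goal rule: met when current power is at or below the baseline reduced by goalPercent. */
  static boolean goalMet(double currentKw, double baselineKw, int goalPercent) {
    return currentKw <= baselineKw * (1 - goalPercent / 100.0);
  }
}
```

Because the store lives in memory, baselines survive only for the current CLI session; in this sketch set-baseline would call record once per source, and monitor-goal would look up the value for the current hour before each comparison.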

The most complex of these new features, though, is monitoring. Both monitor-goal and monitor-power must keep running until the user provides some input. Here Team Teams did not quite achieve its objectives: although the original specifications called for the loop to end upon any keystroke from the user, the actual system only checks for carriage returns. Another shortcoming is that the data printed to the screen is not necessarily real-time: the power values are the latest ones obtained from the server, so they do not necessarily correspond to the intervals that the user provides. In some cases, output from these monitoring commands will "stutter," producing pairs of lines with the same timestamp and values.
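The Java sketch below shows one way such a monitoring loop can be structured, assuming the server query is wrapped in a Supplier. The MonitorLoop and Reading names are hypothetical, not the project's classes. It also suggests why a loop like this ends on a carriage return rather than on any keystroke: on a typical terminal, input is line-buffered, so System.in reports no available bytes until the user presses Enter. Skipping readings whose timestamp repeats is one possible way to hide the stutter.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.PrintStream;
import java.util.Locale;
import java.util.function.BooleanSupplier;
import java.util.function.Supplier;

final class MonitorLoop {
  /** One power reading: the server's timestamp and value in kW (hypothetical type). */
  static final class Reading {
    final String timestamp;
    final double kw;

    Reading(String timestamp, double kw) {
      this.timestamp = timestamp;
      this.kw = kw;
    }
  }

  /** True once the user has typed something; usually only after Enter on a line-buffered console. */
  static BooleanSupplier inputAvailable(InputStream in) {
    return () -> {
      try {
        return in.available() > 0;
      } catch (IOException e) {
        return true; // stop monitoring if the input stream is broken
      }
    };
  }

  /**
   * Prints the latest reading every intervalMs until stop returns true.
   * Readings with the same timestamp as the previous one are skipped.
   * Returns the number of lines printed.
   */
  static int monitor(Supplier<Reading> latest, BooleanSupplier stop, long intervalMs, PrintStream out) {
    String lastTimestamp = null;
    int printed = 0;
    while (!stop.getAsBoolean()) {
      Reading r = latest.get();
      if (!r.timestamp.equals(lastTimestamp)) {
        out.printf(Locale.ROOT, "%s %.1f kW%n", r.timestamp, r.kw);
        lastTimestamp = r.timestamp;
        printed++;
      }
      try {
        Thread.sleep(intervalMs);
      } catch (InterruptedException e) {
        Thread.currentThread().interrupt(); // preserve interrupt status and stop monitoring
        break;
      }
    }
    return printed;
  }
}
```

In a CLI, the call might look like monitor(query, inputAvailable(System.in), 10_000, System.out), where query is a hypothetical Supplier that fetches the latest value from WattDepot. The line the user typed to stop the loop is still buffered afterwards, so the caller should read and discard it before prompting for the next command.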

Team Teams generally worked on this in the same manner as we had on hale-aloha-cli-teams. Work was distributed in much the same way; we all generally had the same responsibilities as on our original project. As our coding standards and tools were the same as those that Team cycuc used, those naturally remained the same as well.

However, we were also much busier while working on this project due to other commitments. Thus, rather than consistent updates throughout the project timeline, one will see several spikes in activity when we had time to work on the project. Unfortunately, this also meant that we had to rush to finish the project on time, and as a result most of our commits were made on the day before our deadline. We were certainly working on the project consistently, but this did not always translate into commits.

We also tended to ask each other for assistance more often than we did on the original project. This might have been due to increasing familiarity with one another, particularly since we all suddenly had at least one thing in common: a distinct hatred for the way that hale-aloha-cli-cycuc was structured. On the other hand, the unfamiliar structure of the system made some overlap in our areas of responsibility inevitable. For example, while I handled the set-baseline and monitor-goal commands, I had to use the HaleAlohaClientUI class, nominally Jayson's responsibility, to transfer data from one to the other. In turn, while Jayson normally handled input and output, hale-aloha-cli-cycuc places the output in the Command implementations, so Jayson had to go into my command classes to work on the output. Whatever the reasons, we did work together a lot more and cover for one another. As a result, while the Issues page for hale-aloha-cli-cycuc may give a general idea of who did what, it vastly underestimates the amount of work that each of us completed. I know that I wrote a little bit of almost every file that we added or modified for this project, and I am fairly certain that the rest of my group members did the same.

Overall, the quality of our work on hale-aloha-cli-cycuc is not too bad. Obviously, the code is nowhere near as good as it would have been had we continued to work on our original code base. However, the work that we have done certainly does perform as expected, and we were able to resolve at least some of the issues that we brought up in our technical review.

Going back to the Three Prime Directives that we used in our technical review:

Review Question 1: Does the system accomplish a useful task?

Yes, the system does accomplish what it should. All of the commands are implemented and run properly.

Review Question 2: Can an external user successfully install and use the system?

Yes, a user can easily download the system from the Downloads section and run it using the .jar file in the root directory of the distribution. The UserGuide page in the wiki on the project site now has instructions for installing the system and running the .jar file. The project site remains mostly unchanged and thus provides the same information as before. Unfortunately, Team Teams was only given committer status, and while that has generally been enough, it does have its limitations. One such limitation is the inability to change much of the project site.

Output is now slightly more organized than before. One of the complaints that we had before concerned the units for the output values, which were incorrect or not present. These have been standardized as kilowatts for power and kilowatt-hours for energy.
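As a small illustration of standardizing units, the hypothetical Units helpers below always print a unit next to the number. They assume the raw values arrive in watts and watt-hours; that is an assumption of this sketch, so the units the WattDepot client actually returns should be checked before dividing.

```java
import java.util.Locale;

/** Hypothetical helpers that always print a unit next to the number. */
final class Units {
  private Units() {}

  /** Formats a power value, assumed to be in watts, as kilowatts. */
  static String power(double watts) {
    // Locale.ROOT keeps '.' as the decimal separator regardless of the user's locale.
    return String.format(Locale.ROOT, "%.2f kW", watts / 1000.0);
  }

  /** Formats an energy value, assumed to be in watt-hours, as kilowatt-hours. */
  static String energy(double wattHours) {
    return String.format(Locale.ROOT, "%.2f kWh", wattHours / 1000.0);
  }
}
```

For example, Units.energy(549_000) yields "549.00 kWh" and Units.power(55_300) yields "55.30 kW".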

Review Question 3: Can an external developer successfully understand and enhance the system?

The DeveloperGuide page on the project wiki remains unchanged, and thus continues to provide a rudimentary explanation of how developers can build and develop the system.

Each class and method has a JavaDoc description. However, Team Teams did not change any of the JavaDoc descriptions that were already in the code, other than adding descriptions where none existed; as a result, some of the descriptions may not be ideal.

Building the system locally is difficult because of server timeouts, though this may be specific to my machine. The system builds perfectly fine on Jenkins.

Jacoco coverage remains universally horrible. Again, this is due in part to timeouts. I admit, though, that the Baseline class, which I created, has no tests of its own; it is only exercised indirectly through its use in the set-baseline and monitor-goal commands. That indirect coverage does indicate, though, that about half of the methods in the Baseline class are unnecessary, since they are never called in practice.

Testing as a whole remains a bit weak. Team Teams was unable to test the code effectively because of the structure of the hale-aloha-cli-cycuc project. The Command interface has a void method, printResults, so there is no return value to test. It is possible to work around this by storing the most recent result in a field and adding a getter for that field, but this is not a very clean solution.
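One way around an untestable void method, sketched here in Java, is to have each command build its output as a String and keep printing as a thin wrapper. ReportCommand and EchoTowerCommand are hypothetical names for illustration; they do not reproduce the project's Command interface, whose exact signature is not shown here.

```java
import java.io.PrintStream;

/**
 * Hypothetical alternative to a command whose only method is a void
 * printResults: the command returns its text, and printing is a default method.
 */
interface ReportCommand {
  /** Computes the text the command would print, so tests can inspect it. */
  String results(String[] args);

  /** Prints the results; callers that only want output can still use this. */
  default void printResults(String[] args, PrintStream out) {
    out.println(results(args));
  }
}

/** Toy command used only to show how a test can check the returned text. */
final class EchoTowerCommand implements ReportCommand {
  @Override
  public String results(String[] args) {
    if (args.length != 1) {
      return "Usage: echo-tower <tower>";
    }
    return "Tower: " + args[0];
  }
}
```

A test can then compare results(...) against the expected text directly, and printResults only needs to be checked once, by passing a PrintStream backed by a ByteArrayOutputStream.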

Most of the coding standards violations mentioned in the technical review have been resolved. Those that remain generally have a comment nearby explaining why they were not removed, usually due to some ambiguity or uncertainty as to whether or not it actually qualifies as a violation.

As mentioned earlier, while looking at the Issues page on the project site gives a general idea of what we worked on, all the members of Team Teams worked on just about everything in the project. Therefore, the Issues page does not give a clear picture of who was responsible for what. The number of issues per team member is fairly useless as a measure of whether work was distributed equally; if I personally had many issues, it is because most of them were small and were sometimes written up while I was completing the solution in question. (Of course, one might argue that working on small issues is an essential component of Issue Driven Project Management.)

The Jenkins page shows that most build failures were resolved fairly quickly. The exception was 9 December 2011, when the system remained in a failed state for some time; this is detailed in Issue 36. Annoyingly, the solution had been known for some weeks, yet Team cycuc had not implemented it themselves.

A quick scan of the Updates page of the project site indicates that 28 of the 38 commits since Team Teams took over hale-aloha-cli-cycuc were associated with a particular issue, or about 74%. According to one of the technical reviews of our original project, this is an increase of a whole 3% over our work on hale-aloha-cli-teams. Clearly, Team Teams is making great strides in remembering to list the issues that its commits address. As mentioned above, updates were sporadic and tended to come in clusters; nonetheless, work on the project was fairly consistent.

The most difficult part of working on hale-aloha-cli-cycuc was trying to understand a nearly foreign way of doing things. In the technical review of hale-aloha-cli-cycuc, I stated that having implemented the project ourselves might make it easier to understand what Team cycuc was doing. When we actually worked on the project, however, it had almost the opposite effect. Because we were so familiar with our own project and our way of doing things, it was difficult to adjust to the new one. The system would be just similar enough for us to think that we knew how to create a solution, and then of course we would find out that the solution did not work at all, forcing us to learn most of the code before we could accomplish anything. I do not believe that the continued development of hale-aloha-cli-cycuc by someone other than its original owners would have been possible had my group members not been as dedicated and resourceful as they are, and I am honored to have contributed in a small way to their efforts.

In many ways, it is tempting to blame the difficulties that we had on the way that Team cycuc structured their system. It is difficult to tell where “different from how I would do it” becomes “who in their right mind would design something like this,” and admittedly one tends to think the latter more often when under pressure. We knew going into this that developing hale-aloha-cli-cycuc would not be an easy task, having found several areas for improvement in our technical review, yet actually working on the project was much harder than I, at least, had anticipated. It certainly seems more productive to continue working on the same project than to keep moving to code that someone else has written; I am certain that we could have implemented the three commands within a week and a half had we remained with hale-aloha-cli-teams. However, taking over projects from other developers, or even just cooperating with them, is undoubtedly something we will have to do as software engineers, and this exercise has certainly given the members of Team Teams a lot of practice in that skill. It has also confirmed that all development teams should have a trained psychiatrist available, but given that I thought so before this project, it is doubtful whether that qualifies as a lesson learned from the experience.

Wednesday, December 14, 2011

Friday, December 2, 2011

Hale Aloha CLI Technical Review - Team cycuc

Some months ago, I wrote about eclipse-cs. To evaluate the plug-in, I referred to the Three Prime Directives, which serve as useful heuristics for examining the usefulness of a system. In theory, we as software engineers should always strive to keep these directives in mind when developing software. In practice, of course, it is all too easy to become wrapped up in the excitement of programming and forget that we are creating the system for its users and future developers. Fortunately, I work with a very skilled team of developers on a project that we have just finished, and perhaps even more fortunately, two other teams of developers are working on similar projects. To ensure that we are all following the Three Prime Directives, we have conducted a technical review of the work that Team cycuc has done; in turn, Team cycuc has evaluated Team pichu, and Team pichu has reviewed the work of my group.

The Three Prime Directives are as follows:

1: The system successfully accomplishes a useful task.

2: An external user can successfully install and use the system.

3: An external developer can successfully understand and enhance the system.

At least intuitively, this seems to provide a good framework for evaluating the system. The Three Prime Directives, if followed, ensure that the system actually does something and account for everyone who could potentially have reason to interact with the system.

The system under evaluation is essentially the same as our own Hale Aloha CLI. hale-aloha-cli-cycuc is a command-line interface that allows the user to communicate with a WattDepot server, providing the user with data concerning energy and power usage on the campus of the University of Hawaii at Manoa. WattDepot is the cross-platform service that gathers, stores, and then provides the aforementioned data to the user.

Team cycuc keeps its code repository on a Google Code site, just as Team Teams does. Likewise, Team cycuc uses Jenkins for continuous integration. Continuous integration, contrary to popular belief, has nothing to do with finding the integral of some equation infinitely. In this context, continuous integration is the practice of automatically building and verifying the current state of a project whenever changes are committed, so that the system is always in working condition; if there is an error, developers are notified immediately so that the problem, whatever it may be, is at least recognized quickly if not resolved promptly. Team cycuc appears to have been using Issue Driven Project Management, just as Team Teams did.

Because of this similarity in projects and the use of identical tools and technologies, the process of reviewing hale-aloha-cli-cycuc is much easier for Team Teams to accomplish. The text of our review is as follows:

Review Question 1: Does the system accomplish a useful task?

Below is a sample run of Team cycuc’s system:

When we first ran Team cycuc's .jar file, it failed to run. Eventually, one of Team cycuc's members updated the system so that it ran successfully from the console. For the most part, the system provides the functionality described in the assignment specifications. However, the formatting of both the dates and the power and energy values differs from the sample output given.

This may be an issue for some users, depending on how they plan to process the data. Reporting the data in units such as kilowatts may be preferable. In the case of the rank-towers command, no units appear next to the output, which may confuse those not familiar with the system. Also, the daily-energy and energy-since commands appear to report the wrong units for the data given. From personal experience with the getData() method, the data it returns must be converted correctly to MWh; in this case, 549 kWh should be reported as 0.549 MWh.

For example, the formatting under the current system is as follows:

Some of the commands do not return data at all. Below is a sample of the output when the rank-towers command was executed:

Essentially, the system attempts to implement the four commands listed in its help menu. The exact usefulness of this system is debatable, as we consider this version of Team cycuc’s system not ready for distribution.

Review Question 2: Can an external user successfully install and use the system?

In addition to containing the files for hale-aloha-cli-cycuc, the project site provides a very general idea of what the project is and does. The home page has a brief description of the system and a picture that presumably explains the group name. This gives viewers an idea of what the system does, but not a very clear one. There is no User Guide page; instead, there is a page titled “PageName” that contains most of the information that a User Guide should. The exception is how to run the system, which is not covered. The distribution file in the Downloads section does include a working version of the system along with an executable .jar file. The version number is included in the distribution folder name, allowing users and developers to distinguish between versions. These version numbers include a timestamp corresponding to the time at which the distribution was created, letting users and developers compare versions chronologically. The numbers that actually indicate major and minor versions appear to have remained at 1.0 since the first downloads became available.

The tests of the system are shown below:

Valid Input:

Invalid Input:

Review Question 3: Can an external developer successfully understand and enhance the system?

The Developers’ Guide wiki page on the cycuc project site provides clear instructions on how to build the system with Ant. The guide also includes information on the automated quality assurance tools used on the project. Specific information about those tools is not given, but developers are informed that the verify task will run all of the automated quality assurance tools. A link to the formatting guidelines documents the stylistic rules that the code is to follow. The Developers’ Guide does not mention Issue Driven Project Management or Continuous Integration. Similarly, instructions on how to generate JavaDoc documentation are not available, though the documentation does appear to come with the project in /doc.

JavaDoc documentation, as mentioned above, comes with the project in /doc, although developers may also generate it through Ant or Eclipse. The documentation itself tends to be well written, though there are some questionable points and the descriptions are somewhat sparse; several methods lack descriptions entirely. There are also a few contradictions, as in CurrentPower.java, where the description of the printResults method (line 28) indicates that the text printed is based on days[0] while the parameter tag for days (line 31) states that days is ignored. Even so, the JavaDoc documentation does show the organization of the system, and the names of the various components match their actual purposes well. The system does appear to have been designed with information hiding in mind, with the Command interface serving as an example.

The cycuc system builds without errors in most cases. A timeout while attempting to access the server will cause the entire build process to stop, which accounts for the instances in which the build fails. Aside from timeouts, the system builds properly.
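One possible mitigation for timeouts, sketched below in Java under the assumption that each server query can be wrapped in a Callable, is to bound the query's running time with a Future so that a slow server produces an empty result instead of stalling the run. This uses only java.util.concurrent; it is not something the cycuc project or the WattDepot client is known to do, and the Bounded name is hypothetical.

```java
import java.util.Optional;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical helper that puts a time limit on a blocking server query. */
final class Bounded {
  private Bounded() {}

  /**
   * Runs query on a worker thread and waits at most timeoutMs for it.
   * Returns empty on timeout (or interruption) instead of blocking the caller.
   */
  static <T> Optional<T> callWithTimeout(Callable<T> query, long timeoutMs) {
    ExecutorService executor = Executors.newSingleThreadExecutor();
    try {
      Future<T> future = executor.submit(query);
      try {
        return Optional.ofNullable(future.get(timeoutMs, TimeUnit.MILLISECONDS));
      } catch (TimeoutException e) {
        future.cancel(true); // interrupt the slow query
        return Optional.empty();
      } catch (InterruptedException e) {
        Thread.currentThread().interrupt(); // preserve the caller's interrupt status
        return Optional.empty();
      } catch (ExecutionException e) {
        // The query itself failed: report it rather than treating it as a timeout.
        throw new IllegalStateException("query failed", e.getCause());
      }
    } finally {
      executor.shutdownNow(); // interrupt the worker and release the executor
    }
  }
}
```

A test could then skip its checks when the result is empty (for example with JUnit's Assume.assumeTrue) rather than failing the whole build. One caveat: a query that ignores interruption, such as a blocking socket read, keeps running in the background after the timeout.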

The data that Jacoco provides concerning test coverage raises some concerns about the adequacy of the testing. The halealohacli package has no tests at all. Testing on the halealohacli.processor package covers 67% of the code and 58% of the possible branches. For halealohacli.command, 94% of the code was executed in testing, while 59% of the branches were taken. (These values seem to vary between runs; this may be due to the aforementioned timeouts.) The low branch coverage in particular may stem from a lack of tests for invalid input; as a result, none of the exception-handling paths are exercised. The tests indicate that parts of the system work for a particular input; however, as there is only one test per test class (with the exception of TestProcessor), it is difficult to be certain that the system behaves correctly. Thus, the existing tests will not necessarily stop new developers from breaking the system: they catch changes that mishandle valid input, but do nothing to stop invalid input from causing problems.
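To illustrate what tests for invalid input can look like, here is a hypothetical date-argument parser with checks that exercise both its normal path and its error branches. The yyyy-MM-dd format and the rule that the date must be before today are assumptions for illustration. The checks use plain Java in place of JUnit so the sketch runs without dependencies.

```java
import java.time.LocalDate;
import java.time.format.DateTimeParseException;

/** Hypothetical parser for a date argument such as the one daily-energy takes. */
final class DateArgument {
  private DateArgument() {}

  /** Parses yyyy-MM-dd and rejects dates that are not strictly before today. */
  static LocalDate parse(String text, LocalDate today) {
    final LocalDate date;
    try {
      date = LocalDate.parse(text); // ISO format, strict: month 13 or day 40 is rejected
    } catch (DateTimeParseException e) {
      throw new IllegalArgumentException("Not a date in yyyy-MM-dd form: " + text, e);
    }
    if (!date.isBefore(today)) {
      throw new IllegalArgumentException("Date must be before " + today + ": " + text);
    }
    return date;
  }
}

/** Plain-Java checks standing in for JUnit tests: one valid case, two invalid ones. */
final class DateArgumentChecks {
  public static void main(String[] args) {
    LocalDate today = LocalDate.of(2011, 12, 2);
    check(DateArgument.parse("2011-11-23", today).equals(LocalDate.of(2011, 11, 23)), "valid date");
    check(rejects("2011-13-40", today), "malformed date");
    check(rejects("2011-12-02", today), "date that is not yet over");
    System.out.println("all checks passed");
  }

  /** True if parse throws, meaning the error branch actually ran. */
  private static boolean rejects(String text, LocalDate today) {
    try {
      DateArgument.parse(text, today);
      return false;
    } catch (IllegalArgumentException e) {
      return true;
    }
  }

  private static void check(boolean ok, String what) {
    if (!ok) {
      throw new AssertionError("failed: " + what);
    }
  }
}
```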

With regard to coding standards, there exist several minor deviations from the standards that do not affect the readability of the code. The amount of comments varies: at times, there is a comment explaining every line of code, while at other points there are entire blocks of code without any documentation. The deviations from the coding standards are provided below:

Overall though, the code is readable; admittedly, the person testing the code had already implemented the project for a separate group and thus might be familiar with the objectives of the code, which would affect the results and opinions of the tester.

Looking through the Issues page associated with this project, it is clear which parts of the system each developer worked on. This team used a variety of the available status options to better inform an external developer about what did and did not work as the project progressed. In some cases, comments on an issue show the decision-making process the team used. Since each issue clearly explains its task, it should be easy for an external developer to determine which developer would be the best person to collaborate with. In terms of work input, it appears that some team members did more than others.

Turning to the CI server associated with this project, it appears that all build failures were corrected promptly, with a maximum latency of roughly 30 minutes. Also, looking through each successful build, this team worked on the project in a consistent fashion, with at least 9 out of 10 commits associated with an appropriate issue.

The Three Prime Directives are as follows:

1: The system successfully accomplishes a useful task.

2: An external user can successfully install and use the system.

3: An external developer can successfully understand and enhance the system.

At least intuitively, this seems to provide a good framework for evaluating the system. The Three Prime Directives, if followed, ensure that the system actually does something and account for everyone who could potentially have reason to interact with the system.

The system under evaluation is essentially the same as our own Hale Aloha CLI. hale-aloha-cli-cycuc is a command-line interface that allows the user to communicate with a WattDepot server, providing the user with data concerning energy and power usage on the campus of the University of Hawaii at Manoa. WattDepot is the cross-platform service that gathers, stores, and then provides the aforementioned data to the user.

Team cycuc has their code repository on a Google Code site, just as Team Teams does. Likewise, Team cycuc uses Jenkins for the purposes of continuous integration. Continuous integration, contrary to popular belief, has nothing to do with finding the integral of some equation infinitely. In this context, continuous integration is a tool that automatically verifies the current state of a project to ensure that the system is always in working condition; if there is an error then developers are notified immediately so that the problem, whatever it may be, is at least recognized quickly if not resolved promptly. Team cycuc appears to have been using Issue Driven Project Management, just as Team Teams did as described here.

Because of this similarity in projects and the use of identical tools and technologies, the process of reviewing hale-aloha-cli-cycuc is much easier for Team Teams to accomplish. The text of our review is as follows:

Review Question 1: Does the system accomplish a useful task?

Below is a sample run of team cycuc’s system:

When we initially ran Team cycuc's .jar file there was a slight problem as it could not successfully run. Eventually one of cycuc's team members had updated the system for it to successfully run in console. For the most part, this system provides functionality as described in the assignment specifications. For example, the formatting of both the date and power / energy is not in the same as the sample output given.

This may be an issue for some people depending on how they plan to process the data given. Reporting the data given in units such as kilowatt may be more desired. In the case of the rank-towers command, no units appear next to the output given which may confuse those not familiar with the system. Also, it appears that for the commands daily-energy and energy-since reports the wrong units with respect to the data given. From personal experience with the getData() method, the data returned by this method must be converted correctly to M Wh. In this case, 549 kWh should be 0.549 M Wh.

For example, the formatting under the current system is as follows;

Some of the commands do not successfully return data at all, below is a sample of the code when the rank-towers command was executed.

Essentially the system attempts to implement the four commands listed in its help menu. The exact usefulness of this system is debatable as we deem this version of cycuc’s system not ready for distribution.

Review Question 2: Can an external user successfully install and use the system?

In addition to containing the files for hale-aloha-cli-cycuc, the project site provides a very general idea of what the project is and does. The home page has a brief description of the system and a picture that presumably provides an explanation for the group name. This does give viewers an idea of what the system does, but not a very clear concept. There is no User Guide page; instead is a page titled “PageName” that contains most of the information that the User Guide should. The exception though is how to execute the system, which is not covered. The distribution file in the Downloads section does include a working version of the system along with an executable .jar file. The version number is included in the distribution folder name, allowing users and developers to distinguish between different versions. These version numbers include the timestamp corresponding to the time at which the distribution was created, thus letting users and developers compare versions chronologically. The numbers that actually indicate major and minor versions appear to have remained at 1.0 since the first downloads became available.

The tests of the system are shown below:

Valid Input:

Invalid Input:

Review Question 3: Can an external developer successfully understand and enhance the system?

The Developers’ Guide wiki page on the cycuc project site provides clear instructions on how to build the system in Ant. The guide also includes information on the automated quality assurance tools used on the project. Specific information about those tools is not given, but developers are informed that the verify task will run all of the automated quality assurance tools. A link to the formatting guidelines serves to document the stylistic rules that the code is to follow. The Developers’ Guide does not mention Issue Driven Project Management or Continuous Integration. Similarly, instructions on how to generate JavaDoc documentation are not available, though the documentation does appear to come with the project in /doc.

JavaDoc documentation, as mentioned above, comes with the project in /doc. However, developers may still generate JavaDoc files through Ant or Eclipse. The JavaDoc documentation itself tends to be well-written, though there are some questionable points and the description is somewhat sparse. Several methods lack descriptions in their JavaDoc documentation. There are a few contradictions within the documentation, as in CurrentPower.java where the description for the printResults method (line 28) indicates that the text printed is based on days[0] while the parameter tag for days (line 31) states that days is ignored. However, the JavaDoc documentation did show the organization of the system, and the names of the various components were well matched with their actual purposes. The system does appear to have been designed to implement information hiding, with the Command interface serving as an example.

The cycuc system builds without errors in most cases. A timeout while attempting to access the server will cause the entire build process to stop, which accounts for the instances in which the build fails. Aside from timeouts, the system builds properly.

The data that Jacoco provides concerning test coverage does induce some slight concerns about the validity of the testing. The halealohacli package has no testing at all. Testing on the halealohacli.processor package covers 67% of the code and 58% of the possible branches. For halealohacli.command, 94% of the code was executed in testing, while 59% of the branches were taken. (These values seem to vary upon repeated testing; this may be due to the aforementioned timeouts.) These low values for branch coverage in particular may stem from a lack of testing for invalid input. As a result, none of the exceptions are checked. The tests indicate that parts of the system work for a particular input; however, as there is only one test per test class (with the exception of TestProcessor) it is difficult to be certain that the system does behave correctly. Thus, the existing testing will not necessarily stop new developers from breaking the system; the testing ensures that developers cannot treat valid input incorrectly, but does nothing to stop invalid input from causing problems.

With regard to coding standards, there exist several minor deviations from the standards that do not affect the readability of the code. The amount of comments varies: at times, there is a comment explaining every line of code, while at other points there are entire blocks of code without any documentation. The deviations from the coding standards are provided below:

Overall though, the code is readable; admittedly, the person testing the code had already implemented the project for a separate group and thus might be familiar with the objectives of the code, which would affect the results and opinions of the tester.

Looking through the Issues page associated with this project, it is clear what parts of the system were worked on by each developer. This team utilized a variety of status options available to better inform an external developer what worked and what didn’t work with respect to project progression. In some cases, clarification in the form of comments show the decision making process this team used when dealing with issues. Since each issue described clearly explains what the task was, it should be easy for an external developer to determine which developer would be the best person to collaborate with. In terms of work input from all of the developers, it appears that some team members did more than others.

Turning to the CI server associated with this project, all build failures appear to have been corrected promptly, with a maximum latency of roughly 30 minutes. In addition, the successful builds show that the team worked on the project consistently, and at least 9 out of 10 commits were associated with an appropriate Issue.

Monday, November 28, 2011

Hale Aloha Command Line Interface

Hale Aloha CLI is an interface allowing users to view information concerning energy and power consumption in the Hale Aloha residences on the University of Hawaii at Manoa campus. For this project, I worked with Jayson Gamiao and Jason Yeo in a trial of Issue Driven Project Management (IDPM). We also experimented with a small number of technologies and tools, such as Jenkins and Google Code.

IDPM unsurprisingly deals with project management, focusing on keeping project members working on issues that continue progress on the project. In theory, it might sound like micromanaging the project; in practice it seems to work much better. In IDPM, each project member receives small tasks to complete. Each project member should always have at least one task to work on. These tasks, being small, should take only a couple of days to complete. This necessitates frequent meetings so that developers have a constant supply of tasks to work on.

In practice, the group followed these guidelines fairly closely. All three group members worked on the project constantly from about 15 November, the date of our second group meeting, at which we discussed how to approach the project. There were three exceptions: 18 to 20 November, when I was ill; 21 to 23 November, when the WattDepot server used in the project was down and could not provide us with data; and 24 November, when we had to wait for data to accumulate after a server reset. The tasks (referred to as “issues” on the project site) could have been smaller; many of them took longer than two days to complete. On the other hand, one could argue that the tasks only seem to have taken more than two days, since some group members were reluctant to mark tasks as “Done” until their code had received extensive testing and peer review. In addition, as this was the first time any of us had worked with IDPM, we naturally made mistakes and omissions, such as forgetting to refer to the relevant issues in our commit logs.

After my comments concerning testing for the Robocode project (essentially, that it would be better to incorporate testing early in the development process), this project let me experience that approach firsthand. Throughout this project, any code that I wrote was almost immediately accompanied by a JUnit test. This was never a tremendous annoyance; rather, the testing allowed me to verify my work long before any real manual testing was possible through the command-line interface.

As for the project itself, the user is provided with a few commands:

• current-power [source]: Prints the current power consumption of the source.

• daily-energy [source] [date]: Prints the amount of energy that the source consumed on the given date.

• energy-since [source] [date]: Prints the amount of energy that the source has consumed from the given date to the present.

• rank-towers [date] [date]: Prints the Hale Aloha towers in order of energy consumption between the dates given.

• help: Prints a list of commands that the user may type in.

• quit: Exits the interface.

All of these commands work as intended. There are a few features that we had hoped to implement but lacked the time for. In particular, we had considered using reflection to ease the continued expansion of this interface; unfortunately, this never came about.
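For the reflection idea, a minimal sketch under stated assumptions might look like the following. The `Command` interface, the naming convention ("current-power" maps to a class named `CurrentPower`), and the nested example class are all hypothetical; this is not how our interface is actually structured. The point is that adding a command would then only require adding a class, not editing a dispatch table.

```java
import java.util.Locale;

/** Hypothetical sketch: resolve a command name such as "current-power" to a class via reflection. */
public class ReflectiveDispatch {

  /** Hypothetical interface that every command would implement. */
  public interface Command {
    String run(String[] args);
  }

  /** Example command; its name and behavior are illustrative only. */
  public static class CurrentPower implements Command {
    @Override
    public String run(String[] args) {
      return "current-power called with " + args.length + " argument(s)";
    }
  }

  /** Converts "current-power" to "CurrentPower". */
  static String toClassName(String commandName) {
    StringBuilder sb = new StringBuilder();
    for (String part : commandName.split("-")) {
      if (!part.isEmpty()) {
        sb.append(Character.toUpperCase(part.charAt(0)))
            .append(part.substring(1).toLowerCase(Locale.ROOT));
      }
    }
    return sb.toString();
  }

  /** Loads and instantiates the matching nested command class, or returns null if there is none. */
  static Command lookup(String commandName) {
    String binaryName = ReflectiveDispatch.class.getName() + "$" + toClassName(commandName);
    try {
      Class<?> cls = Class.forName(binaryName);
      if (!Command.class.isAssignableFrom(cls)) {
        return null;
      }
      return (Command) cls.getDeclaredConstructor().newInstance();
    } catch (ReflectiveOperationException e) {
      // No such class, no public no-arg constructor, or instantiation failed.
      return null;
    }
  }

  public static void main(String[] args) {
    Command cmd = lookup("current-power");
    System.out.println(cmd == null ? "Unknown command" : cmd.run(new String[] {"Lehua"}));
  }
}
```

The trade-off is that a misspelled class name silently becomes an "unknown command" at run time rather than a compile error.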

Much more detailed information concerning this project is available at the project site.

Tuesday, November 8, 2011

WattDepot

In keeping with the topic of energy, I have begun working with WattDepot. The WattDepot project page explains it better, but in short, WattDepot provides the capability to retrieve energy data from sensors. Although WattDepot clients may be written in many languages, I have continued to use Java as my language of choice. (At least for programming; English is slightly better suited to entries such as this.)

As with a few previous systems, I have completed a set of simple katas to help in acquainting myself with WattDepot. These are as follows:

1: SourceListing: Connects to a WattDepot server and prints all sources on the server with their descriptions.

2: SourceLatency: Connects to a WattDepot server and prints all sources on the server with their latencies, where “latency” is defined as the time elapsed since the last measurement was taken.

3: SourceHierarchy: Connects to a WattDepot server and prints the hierarchy of the sources. This is possible through the use of virtual sources, which combine the input of multiple physical sources to produce a result that may often be more useful for real-world analysis.

4: EnergyYesterday: Connects to a WattDepot server and prints all sources on the server with the total amount of energy each source consumed on the previous day, sorted in ascending order by energy consumption.

5: HighestRecordedPowerYesterday: Connects to a WattDepot server and prints all sources on the server with the highest power recorded for each source on the previous day and the time at which it occurred, sorted in ascending order by that highest value.

6: MondayAverageEnergy: Connects to a WattDepot server and prints all sources on the server with the average energy consumption on the past two Mondays for each source.

To start from the beginning then, SourceListing was a moderately difficult exercise. This difficulty is primarily the result of being unfamiliar with a new system. Once I knew what resources I had, actually completing the kata was not terribly difficult. This first kata took approximately an hour to complete.

Next was SourceLatency. However, SourceLatency is practically the same as SourceListing, with only the difference of printing latency instead of descriptions. As a result, the code is essentially SourceListing with a few additions to make it print the latency of all sources. This made SourceLatency perhaps one of the easiest katas, and it was completed well within forty-five minutes, even accounting for time spent going back in to remove various Checkstyle, PMD, and FindBugs errors.
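Since latency is defined as the time elapsed since the last measurement, the core computation is just a subtraction of timestamps. A minimal sketch, assuming both timestamps are available as XMLGregorianCalendar values (how the last measurement's timestamp is fetched from the server is left out, and the timestamps below are made up):

```java
import javax.xml.datatype.DatatypeConfigurationException;
import javax.xml.datatype.DatatypeFactory;
import javax.xml.datatype.XMLGregorianCalendar;

/** Hypothetical sketch: latency as seconds between the last measurement and "now". */
public class LatencySketch {

  /** Parses an xsd:dateTime string, wrapping the factory's checked exception. */
  static XMLGregorianCalendar parse(String lexical) {
    try {
      return DatatypeFactory.newInstance().newXMLGregorianCalendar(lexical);
    } catch (DatatypeConfigurationException e) {
      throw new IllegalStateException(e);
    }
  }

  /** Whole seconds elapsed from lastMeasurement to now; time zone offsets are respected. */
  static long latencySeconds(XMLGregorianCalendar lastMeasurement, XMLGregorianCalendar now) {
    long last = lastMeasurement.toGregorianCalendar().getTimeInMillis();
    long current = now.toGregorianCalendar().getTimeInMillis();
    return (current - last) / 1000;
  }

  public static void main(String[] args) {
    XMLGregorianCalendar last = parse("2011-11-07T10:00:00.000-10:00");
    XMLGregorianCalendar now = parse("2011-11-07T10:02:30.000-10:00");
    System.out.println(latencySeconds(last, now) + " seconds"); // prints 150 seconds
  }
}
```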

In contrast, SourceHierarchy was the most complex of the katas, as shown by the fact that it took at least ten hours of programming alone, more than all the other katas combined. A fair amount of this difficulty was due to the way Source.getSubSources is implemented: since the method returns a String rather than a list of Source instances, some String manipulation is necessary. I suspect that there is a better way of working with the subsources, but I have yet to find it.

I also had to learn how the hierarchy worked, and I am still not entirely certain about some points. For example, if B is a subsource of A and C is a subsource of B, then by transitivity C is a subsource of A. However, I do not know whether the getSubSources method accounts for that or returns only immediate subsources.

My solution to printing the hierarchy involved a recursive method. This method keeps track of the “depth” of the current source; that is, how many levels of subsourcing lie between the current source and some root. I am not quite certain how I got the idea of using recursion, but by last Thursday I was already thinking about it, even though I had barely started working on the kata at that point. Although I am rather proud of the method and of thinking of it myself, I am not certain how efficient it is compared to an iterative algorithm. Then again, the recursive approach certainly seems much better than any of my other plans, which would have involved messy processes requiring multiple arrays to store sensor data and hierarchy depth.
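The recursive approach can be sketched as follows. This is a hypothetical reconstruction, not my kata code: it assumes the subsource Strings have already been parsed into a map from each source name to its immediate subsources (with every source present as a key), and it assumes the hierarchy has no cycles, since a cycle would recurse forever. The names A through D echo the example above.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch: print a source hierarchy recursively, indenting by depth. */
public class HierarchySketch {

  /** Appends the source, then recursively its subsources one level deeper. */
  static void printTree(String source, Map<String, List<String>> subSources, int depth, StringBuilder out) {
    for (int i = 0; i < depth; i++) {
      out.append("  ");
    }
    out.append(source).append('\n');
    for (String child : subSources.getOrDefault(source, Collections.<String>emptyList())) {
      printTree(child, subSources, depth + 1, out);
    }
  }

  /** Roots are sources that never appear as any other source's immediate subsource. */
  static String printHierarchy(Map<String, List<String>> subSources) {
    Set<String> children = new HashSet<>();
    for (List<String> kids : subSources.values()) {
      children.addAll(kids);
    }
    StringBuilder out = new StringBuilder();
    for (String source : subSources.keySet()) {
      if (!children.contains(source)) {
        printTree(source, subSources, 0, out);
      }
    }
    return out.toString();
  }

  public static void main(String[] args) {
    // Hypothetical data: immediate subsources only; D has no parent and no children.
    Map<String, List<String>> subSources = new LinkedHashMap<>();
    subSources.put("A", Arrays.asList("B"));
    subSources.put("B", Arrays.asList("C"));
    subSources.put("C", Collections.<String>emptyList());
    subSources.put("D", Collections.<String>emptyList());
    System.out.print(printHierarchy(subSources));
  }
}
```

Because the recursion descends through B to reach C, C appears under A even if getSubSources only returns immediate subsources. If it returned transitive subsources as well, C would be printed twice under A, once directly and once beneath B.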

After that intensive process for SourceHierarchy, I moved on to EnergyYesterday. EnergyYesterday required the list of sources to be sorted by energy consumption, so a sorting algorithm was necessary; I chose merge sort, and a variant of it appears in my code. This kata was also where I began to notice the problems with having to deal with a server. Every call to the server for sensor data increases the chance of an error occurring, so my code requests data about the sensors only once. The data is then stored in a String, in fact the very same one that is later used for output. Though not as intensive as SourceHierarchy, EnergyYesterday still took about two hours to complete.
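A merge sort over source/energy pairs in ascending order might look like this minimal sketch. The `SourceEnergy` class and the energy values are hypothetical stand-ins for the data the kata retrieves from the server.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: stable merge sort of sources by energy, ascending. */
public class EnergyMergeSort {

  /** Hypothetical pairing of a source name with the energy it consumed. */
  static final class SourceEnergy {
    final String name;
    final double energy;

    SourceEnergy(String name, double energy) {
      this.name = name;
      this.energy = energy;
    }

    @Override
    public String toString() {
      return name + "=" + energy;
    }
  }

  /** Returns a new list sorted in ascending order of energy; the input is not modified. */
  static List<SourceEnergy> mergeSort(List<SourceEnergy> items) {
    if (items.size() <= 1) {
      return new ArrayList<>(items);
    }
    int mid = items.size() / 2;
    List<SourceEnergy> left = mergeSort(items.subList(0, mid));
    List<SourceEnergy> right = mergeSort(items.subList(mid, items.size()));
    List<SourceEnergy> merged = new ArrayList<>(items.size());
    int i = 0;
    int j = 0;
    while (i < left.size() && j < right.size()) {
      // "<=" keeps equal energies in their original order, so the sort is stable.
      if (left.get(i).energy <= right.get(j).energy) {
        merged.add(left.get(i++));
      } else {
        merged.add(right.get(j++));
      }
    }
    while (i < left.size()) {
      merged.add(left.get(i++));
    }
    while (j < right.size()) {
      merged.add(right.get(j++));
    }
    return merged;
  }

  public static void main(String[] args) {
    List<SourceEnergy> data = new ArrayList<>();
    data.add(new SourceEnergy("A", 310.5));
    data.add(new SourceEnergy("B", 120.0));
    data.add(new SourceEnergy("C", 245.25));
    System.out.println(mergeSort(data)); // prints [B=120.0, C=245.25, A=310.5]
  }
}
```

In practice, `List.sort` with a `Comparator` would do the same job, and its sort is documented as stable; writing the merge by hand is mostly an exercise.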

One bit of code that I am still annoyed with also started in EnergyYesterday, though I did not truly recognize the problem until I reached MondayAverageEnergy. To calculate the energy consumed on the previous day, it is necessary to know which date the previous day is. Since WattDepot uses the XMLGregorianCalendar class, I decided to do the same. Unfortunately, as far as I can tell, the XMLGregorianCalendar class does not automatically adjust its fields to avoid invalid values. That is, if “yesterday” falls in a different month, the day has to wrap around and the month has to be decremented by hand. While understandable, this means that a large portion of my code in the last three katas exists only to resolve those sorts of issues and prevent them from causing problems.
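One alternative worth noting, which I did not use in the katas: although the XMLGregorianCalendar setters reject out-of-range values rather than normalizing them, the `XMLGregorianCalendar.add(Duration)` method is specified to carry over across days, months, and years. A minimal sketch under that assumption (the helper names and the UTC-10:00 offset are my own choices):

```java
import javax.xml.datatype.DatatypeConfigurationException;
import javax.xml.datatype.DatatypeFactory;
import javax.xml.datatype.Duration;
import javax.xml.datatype.XMLGregorianCalendar;

/** Hypothetical sketch: step back whole days and let add(Duration) handle month/year wraparound. */
public class YesterdaySketch {

  private static DatatypeFactory factory() {
    try {
      return DatatypeFactory.newInstance();
    } catch (DatatypeConfigurationException e) {
      throw new IllegalStateException(e);
    }
  }

  /** Midnight on the given date, with a UTC-10:00 (Hawaii) offset given in minutes. */
  static XMLGregorianCalendar date(int year, int month, int day) {
    return factory().newXMLGregorianCalendar(year, month, day, 0, 0, 0, 0, -600);
  }

  /** Returns a new calendar moved back by the given number of days; the input is unchanged. */
  static XMLGregorianCalendar daysBefore(XMLGregorianCalendar start, int days) {
    // isPositive = false makes this a negative duration of "days" days.
    Duration back = factory().newDuration(false, 0, 0, days, 0, 0, 0);
    // add() mutates in place, so work on a copy.
    XMLGregorianCalendar result = (XMLGregorianCalendar) start.clone();
    result.add(back);
    return result;
  }

  public static void main(String[] args) {
    XMLGregorianCalendar yesterday = daysBefore(date(2011, 11, 1), 1);
    System.out.println(String.format("%d-%02d-%02d",
        yesterday.getYear(), yesterday.getMonth(), yesterday.getDay())); // prints 2011-10-31
  }
}
```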

While SourceHierarchy was the most complex kata, HighestRecordedPowerYesterday was possibly the most frustrating. My original plan had been to sample power at fifteen-minute intervals; however, this took far too much time, and the connection to the WattDepot server would invariably time out. Even increasing the interval to an hour did not help in all cases. It is at times like these that I wonder if the name is really “What Depot?”, since the server never seems to be working when it needs to be. My solution wraps each request in a try-catch block; when a request times out, the program makes a new connection to the server and moves on to the next source. This is perhaps not ideal, but it works, and the user does receive a message explaining why about half of the sources do not have a valid power level. It might have been a good idea to rearrange some variables so that, when the connection is lost, the list still keeps whatever highest power consumption and time had been found for that source so far. Another, less significant, issue was formatting. The program had to print the times at which the highest levels of power consumption occurred; however, the format methods were uncooperative and refused to accept certain conversion characters. This also led to a brute-force solution in which I handled that formatting myself instead of using the format method. In the end, HighestRecordedPowerYesterday took about two and a half hours to finish.
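A plausible cause of the formatting trouble (I cannot confirm it was the exact one I hit) is that the `%t` conversions used by `String.format` accept only `long`, `Long`, `Calendar`, `Date`, and `TemporalAccessor` arguments, so passing an `XMLGregorianCalendar` directly throws an `IllegalFormatConversionException`. Converting with `toGregorianCalendar()` first avoids the problem. The timestamp below is made up:

```java
import java.util.GregorianCalendar;
import java.util.IllegalFormatConversionException;
import javax.xml.datatype.DatatypeConfigurationException;
import javax.xml.datatype.DatatypeFactory;
import javax.xml.datatype.XMLGregorianCalendar;

/** Hypothetical sketch: format the time of day from an XMLGregorianCalendar. */
public class TimeFormatSketch {

  /** Parses an xsd:dateTime string, wrapping the factory's checked exception. */
  static XMLGregorianCalendar parse(String lexical) {
    try {
      return DatatypeFactory.newInstance().newXMLGregorianCalendar(lexical);
    } catch (DatatypeConfigurationException e) {
      throw new IllegalStateException(e);
    }
  }

  /** Returns HH:MM in the timestamp's own offset; %tR is shorthand for %tH:%tM. */
  static String timeOfDay(XMLGregorianCalendar timestamp) {
    GregorianCalendar calendar = timestamp.toGregorianCalendar();
    return String.format("%tR", calendar);
  }

  public static void main(String[] args) {
    XMLGregorianCalendar peak = parse("2011-11-07T18:45:00.000-10:00");
    try {
      // XMLGregorianCalendar is not a type that %t accepts, so this throws.
      System.out.println(String.format("%tR", peak));
    } catch (IllegalFormatConversionException e) {
      System.out.println("Rejected: " + e.getMessage());
    }
    System.out.println(timeOfDay(peak)); // prints 18:45
  }
}
```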

Finally, MondayAverageEnergy was very similar to EnergyYesterday. The process of gathering data was almost exactly the same; the differences were that data had to be gathered for two separate days and that the search had to extend back further than a single day. This exposed some of the problems in EnergyYesterday: the wraparound error described above is easy to miss when you only move back a single day, but much harder to miss when you must look up to fourteen days into the past. Unfortunately, as described above, the solution that I found is rather awkward. MondayAverageEnergy took a little over an hour to finish.
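With the `java.time` API added in Java 8 (well after these katas were written), finding the past two Mondays no longer requires any manual wraparound. A minimal sketch, assuming "the past two Mondays" means the two most recent Mondays strictly before the given date:

```java
import java.time.DayOfWeek;
import java.time.LocalDate;
import java.time.temporal.TemporalAdjusters;

/** Hypothetical sketch: the two most recent Mondays before a date, using java.time. */
public class PastMondaysSketch {

  /** Most recent Monday strictly before today, then the Monday one week earlier. */
  static LocalDate[] pastTwoMondays(LocalDate today) {
    LocalDate first = today.with(TemporalAdjusters.previous(DayOfWeek.MONDAY));
    LocalDate second = first.minusWeeks(1); // month and year boundaries are handled automatically
    return new LocalDate[] {first, second};
  }

  public static void main(String[] args) {
    LocalDate[] mondays = pastTwoMondays(LocalDate.of(2011, 11, 8));
    System.out.println(mondays[0] + " and " + mondays[1]); // prints 2011-11-07 and 2011-10-31
  }
}
```

Note that when run on a Monday, `previous` skips that day and returns the Monday a week earlier.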

Despite all the difficulties, the programming and problem-solving processes themselves were quite enjoyable. There are certainly some issues in my solutions, and working on some of the code past midnight historically has a negative effect on the quality of my work. However, the additional experience with Java and Ant is in and of itself valuable; the fact that this work has some practical value makes it even more important.

Monday, October 31, 2011

Energy Development in Hawaii

Modern society depends greatly on energy. Cars need gasoline to go anywhere (aside from downhill). Cell phones need batteries to operate. Software engineers need electricity to power their computers so that they can write blog entries. While this dependency upon energy is not necessarily a bad thing (cars, cell phones, and software engineers certainly offer their conveniences), this relationship between society and energy does create the potential for problems. Hawaii, being a unique place, has its own strengths and weaknesses when it comes to energy.

At first glance, Hawaii would seem to have several severe disadvantages when it comes to energy needs. Being an archipelago far from any typical sources of energy makes certain options unviable. Oil, which is what many people automatically think of when asked about energy sources, is not naturally found in Hawaii and must be imported. The same is true of many other raw materials used in energy production, and since they must be imported, they are much more expensive in Hawaii than elsewhere. Because Hawaii consists of separate islands, the islands also cannot easily share whatever energy they produce with one another. They also differ from one another in many respects, so a solution that works on one island may be unworkable on another.

There also exist concerns about the usage of land on which energy development might take place. As with most land issues, there are those who own the land, those who think they own the land, and those who know that they do not own the land but want everyone to think that they do. Across all of these situations though, there is the need to carefully consider a wide range of factors when constructing facilities for energy production. The islands of Hawaii have a very limited amount of land for development, so any plans to use the land must be well thought out. Residents may not want to live near a power plant, or fertile land may be better used for agriculture.

On the other hand, the Hawaiian Islands also provide certain unique benefits and opportunities with regard to energy development. Hawaii offers a great variety of renewable resources. While Hawaii does not always fit the tourist ideal of bright sunny skies, solar energy seems to be a common choice for alternative energy, especially in residential areas. Geothermal energy is also a possibility at the State or county level, though trying to use it for individual houses would be questionable (though as with anything involving heat and particularly magma, it would certainly be interesting; a moat of lava would probably be effective at keeping trick-or-treaters away).

Even Hawaii's negative characteristics with regard to energy development can become positives with the right framing. The high current cost of energy provides an incentive to find cheaper sources, and alternatives look far more appealing against conventional methods here than they might elsewhere. The limited, isolated nature of the Hawaiian Islands can also be a positive factor, since their energy needs are correspondingly limited.

Energy production in Hawaii offers several opportunities for software engineers to participate in research. Researchers will often benefit from having tools that can analyze the data that they obtain. The data itself is just numbers; software engineers can present that data in a meaningful form not only to the researchers but to the participants and even to the general public. Software engineers can also help in providing quality assurance tools to verify the data that the researchers gather. With the amount of money invested in this sort of research, ensuring that the results are valid becomes extremely important. On a larger scale though, most of the research on energy focuses on hardware solutions: building a particular power plant, or monitoring fluctuations in energy use in different locations. Software engineers can add a software-based perspective to solving energy problems. For example, given the problem of the lights in houses being left on throughout the night, a hardware solution might involve light bulbs that are energy-efficient and circuits that automatically shut off after a certain amount of time. While this works, the hardware solution is also rather inflexible—if the user wants to keep the lights on, he or she would have to manually turn the lights back on each time the lights automatically turned off. In contrast, a software solution might involve a program allowing the user to select how long to leave the lights on before automatically turning off, with the ability to make exceptions in special cases or account for variables, perhaps giving warnings at intervals before the lights are automatically turned off.

Friday, October 21, 2011

Reflections on Software Engineering

Over the past few months, I have learned a great deal about various software engineering tools as well as the principles behind their use. Of course, the actual use of these tools takes precedence in most of these blog entries, since that is the more exciting topic: actually doing something is more glamorous than sitting around discussing theory. Every once in a while, however, there is the desire to relax and look back on these general principles. This is particularly true for students, who can use such study as a means of procrastinating on other assignments while still doing something academically related.

For these reasons, I have started reflecting on my work over these months and have collected some questions that this work has taught me to answer.

1: How does an IDE differ from a build system?

An IDE allows developers to write, compile, and test code on their own systems. Build systems provide standardized compilation, testing, packaging, and automated quality assurance that work across platforms and IDEs. IDEs are suited for individual developers; build systems allow for efficient collaboration between project members. For that reason, all functions of a build system should be IDE-independent.

2: What is the difference between using == and equals to test equality?

When used with objects, == tests object identity, while the equals method tests logical equality as defined by the object's class (for classes such as String, equality of value). As a result, == should be used to check whether two references point to the same object (that is, whether one object literally is the other), while equals should be used to determine whether two objects have the same value.
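A short Java example of the difference follows. The class name and strings are illustrative; `new String(...)` is used deliberately so that the two strings are distinct objects rather than one shared literal.

```java
/** Demonstrates identity (==) versus logical equality (equals) for objects. */
final class EqualityDemo {
    static boolean[] compare() {
        String a = new String("blues");  // new String forces a distinct object
        String b = new String("blues");  // same characters, different object
        String c = a;                    // c refers to the very same object as a
        return new boolean[] {
            a == b,       // false: two different objects
            a.equals(b),  // true: same character sequence
            a == c        // true: same object
        };
    }

    public static void main(String[] args) {
        boolean[] r = compare();
        System.out.println(r[0] + " " + r[1] + " " + r[2]);  // prints "false true true"
    }
}
```

A class that does not override `equals` inherits `Object.equals`, which is itself an identity check, so for such classes the two tests give the same answer.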

3: In what order will Ant resolve dependencies?

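Ant will not run a target until all of that target's dependencies have been run. As a result, the order behaves like a stack: the target you invoke is the first one considered but the last one executed. The dependencies listed in a target's depends attribute run first, from left to right, each one together with its own dependencies, and no target runs more than once.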
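The ordering can be modeled as a depth-first traversal of the dependency graph. This Java sketch imitates that behavior; it is not Ant's own code, and the targets A through D follow the example in the Ant manual, where D depends on C, B, and A.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Sketch of Ant-style ordering: dependencies depth first, left to right, each target once. */
final class AntOrder {
    static List<String> executionOrder(Map<String, List<String>> depends, String target) {
        List<String> order = new ArrayList<>();
        visit(target, depends, new HashSet<>(), order);
        return order;
    }

    private static void visit(String target, Map<String, List<String>> depends,
                              Set<String> seen, List<String> order) {
        // Each target is handled at most once. Because a target is marked before its
        // dependencies are visited, a cycle is silently cut here, whereas real Ant
        // reports a circular dependency as an error.
        if (!seen.add(target)) {
            return;
        }
        for (String dep : depends.getOrDefault(target, List.of())) {
            visit(dep, depends, seen, order);  // dependencies first, left to right
        }
        order.add(target);                     // the target itself runs last
    }

    public static void main(String[] args) {
        Map<String, List<String>> depends = Map.of(
            "A", List.of(),
            "B", List.of("A"),
            "C", List.of("B"),
            "D", List.of("C", "B", "A"));
        System.out.println(executionOrder(depends, "D"));  // prints [A, B, C, D]
    }
}
```

Even though D lists C first, A runs first, because C depends on B and B depends on A; by the time D's own references to B and A come up, both have already run.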

4: Does 100% coverage from a white-box test mean that the code is free of bugs?

No. 100% coverage only indicates that the test or tests executed every line of code. It does not mean that every line produced the correct result for every input, so errors can still remain in the operation of the application.
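As a small illustration (the `Stats` class and its `average` method are hypothetical), the following Java method contains a bug that a single test can miss even though that test executes every line.

```java
/** A method that one test can cover completely while a bug remains. */
final class Stats {
    // Bug: sum / values.length is integer division, so the fraction is
    // discarded before the result is widened to double.
    static double average(int[] values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum / values.length;
    }

    public static void main(String[] args) {
        // This single test executes every line of average() and passes...
        System.out.println(average(new int[] {2, 4}) == 3.0);  // prints true
        // ...yet the method is still wrong for other inputs.
        System.out.println(average(new int[] {1, 2}));         // prints 1.0, not 1.5
    }
}
```

A correct version would divide as floating point, for example `(double) sum / values.length`, and would also need a decision about empty arrays, which currently throw an `ArithmeticException`. The coverage figure reveals neither problem.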

5: If the trunk of an SVN project is always supposed to be a working version of the project, why is it necessary to run the “verify” target upon checkout of an updated version?

Running the “verify” target ensures that the updated version does in fact work. The fact that the trunk should always be a working version does not mean that it always will be. If this initial run of “verify” does not produce any errors, then any errors that appear after edits to the code must be due to those edits, which makes those problems easier to track down. However, if the trunk does not pass the “verify” target, then the trunk should be fixed before development progresses further.

Wednesday, October 19, 2011

Configuration Management

Configuration management was relatively easy after the work done on the competitive Robocode robot. It helped that I had previously used Google Project Hosting for another project, though my experiences with the service were somewhat mixed. Because I had no experience with SVN at that time, I was limited to editing the files that were already on the project page, which meant updating files one at a time. Obviously, this was a terribly inefficient way to work on the project. The project never actually broke (unless someone checked out the project while I was partway through updating files, no one would notice that the files were out of sync), but the risk was still there, and there was also the danger that I might forget to update one or more files, resulting in a final product that did not work. On the positive side, updating files one by one meant that I was recorded as making a lot of updates to the code, so anyone taking a casual glance at the list of updates would assume that I was doing my fair share of the work.

I was successful in making updates to a sample project page and creating a project site for my own DeaconBlues Robocode robot. In doing this, I learned how to use Google Project Hosting and TortoiseSVN. There was a minor issue with TortoiseSVN: the latest version does not support Windows XP with Service Pack 2, which my current system uses, so I am using version 1.6.16. However, at least for these early stages, where I only have to check out, update, and commit files, any loss in functionality is not noticeable.

Obviously, using SVN to commit changes to a project all at once is much easier than going through all of the files one by one. TortoiseSVN is a very convenient, clever system that allows me to easily commit changes. I remain more ambivalent about Google Project Hosting; though it certainly does what it is supposed to, I still think that being able to upload and especially to delete files through Google Project Hosting itself should be much easier than it is now. Although I can work around this with SVN (and doing so is in fact better since it allows me to run a verification check before committing the change), I think that since the files are on the project site, it should be possible to add to or delete from that set of files through the project site.

Overall though, this first experience with SVN and configuration management was positive. I can see how Google Project Hosting will be very useful for collaboration with others in the future.

Tuesday, October 11, 2011

Competitive Robocode Robot

Overview

In continuation of my work on the Robocode and Ant katas, I have produced a competitive robot called “DeaconBlues” (represented as “deaconblues” in Robocode itself) for use in the Robocode system. I use “competitive” in the loosest possible sense, of course; this particular robot has difficulty defeating even some of the sample robots. However, it does have the potential to succeed so long as circumstances are not too adverse.

My objective in this project was to create a robot that could reliably defeat the sample Walls robot; my initial observations of Robocode suggested that Walls would be the most difficult opponent. However, I spent most of the time between finishing the Robocode katas and now developing a targeting system that would have been extremely valuable in any competitive robot. Of course, being able to track an enemy is useful against any opponent, not just Walls. I actually did manage to implement my system a few days before this writing. It was fast, reasonably efficient, and required only basic trigonometry to understand. It also did not work, which was a significant problem.

Fortunately, I also had a backup plan for a robot that focused primarily on defeating Walls. This robot is described below.

Design

Movement

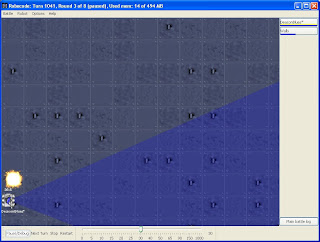

DeaconBlues moves in a pattern very similar to that of Walls in that it circles around the outer edge of the field. Against robots other than Walls, DeaconBlues and Walls move in virtually identical ways. However, when DeaconBlues detects an enemy that appears to be using a Walls strategy, it determines whether the enemy is moving clockwise or counterclockwise around the field and then goes in the opposite direction. The hope is that doing so will result in a situation such as the one in the picture above.
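One way to decide which way an enemy is circling is to take the sign of a two-dimensional cross product between the enemy's position relative to the field center and its velocity. The sketch below is a hypothetical Java illustration of that idea, not DeaconBlues's actual code; it assumes Robocode's conventions of an origin at the bottom-left corner, y increasing upward, and headings in degrees measured clockwise from north.

```java
/** Hypothetical sketch: is an enemy circling the field clockwise or counterclockwise? */
final class WallDirection {
    /**
     * Returns +1 for counterclockwise, -1 for clockwise, and 0 when the enemy is
     * stopped or moving straight toward or away from the center.
     * Assumes origin at bottom-left, y upward, heading clockwise from north.
     */
    static int rotation(double enemyX, double enemyY, double headingDegrees, double velocity,
                        double fieldWidth, double fieldHeight) {
        double rx = enemyX - fieldWidth / 2;   // position relative to the field center
        double ry = enemyY - fieldHeight / 2;
        double h = Math.toRadians(headingDegrees);
        double vx = velocity * Math.sin(h);    // with north-based headings, x uses sine
        double vy = velocity * Math.cos(h);    // and y uses cosine
        double cross = rx * vy - ry * vx;      // z-component of r x v
        return (int) Math.signum(cross);
    }
}
```

DeaconBlues's counter-strategy would then be to circle with the opposite sign. Robocode velocities can be negative when a robot drives backward, so the sketch keeps the velocity's sign rather than taking its absolute value.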

The sample Walls robot always tries to face toward the center of the field. This leaves its flanks vulnerable as it moves along the edges. As the picture shows, DeaconBlues is able to fire at Walls without fear of counterattack, even though Walls has nearly seventy more energy than DeaconBlues does. In fact, a few seconds later DeaconBlues was able to destroy Walls through a combination of ramming and shooting.

Targeting

Unfortunately, targeting is one of the weak points in DeaconBlues. Without the working targeting system that I was hoping to develop, DeaconBlues must simply fire upon seeing an enemy and hope that it is still there when the bullet arrives. DeaconBlues also falls a bit short in selecting targets, though this is not as great a problem if DeaconBlues only has to deal with a single opponent at a time. The one aspect of targeting that DeaconBlues is reasonably competent in is remembering the characteristics of its targets; the downside is that DeaconBlues must thus focus wholly on a single target or else invalidate all that information.
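The simplest improvement over firing at the enemy's last seen position is linear targeting, which assumes the enemy keeps its current heading and speed. This is a standard technique swapped in for illustration, not the targeting system described earlier. The Java sketch below uses only trigonometry and Robocode's bullet-speed rule of 20 - 3 * firepower; the class and method names are hypothetical.

```java
/** Hypothetical sketch of linear (constant heading and speed) predictive targeting. */
final class LinearAim {
    /** Robocode bullet speed, in pixels per tick, for a given firepower. */
    static double bulletSpeed(double power) {
        return 20 - 3 * power;
    }

    /**
     * Absolute bearing in degrees (clockwise from north) at which to fire so that a
     * bullet of the given power meets an enemy that keeps its heading and velocity.
     * Repeats: estimate flight time, move the enemy forward that long, re-estimate.
     */
    static double aimBearing(double myX, double myY, double enemyX, double enemyY,
                             double enemyHeadingDeg, double enemyVelocity, double power) {
        double h = Math.toRadians(enemyHeadingDeg);
        double speed = bulletSpeed(power);
        double px = enemyX;
        double py = enemyY;
        for (int i = 0; i < 10; i++) {
            double ticks = Math.hypot(px - myX, py - myY) / speed;  // bullet flight time
            px = enemyX + Math.sin(h) * enemyVelocity * ticks;
            py = enemyY + Math.cos(h) * enemyVelocity * ticks;
        }
        // atan2(dx, dy) measures the angle clockwise from north (the +y axis).
        double bearing = Math.toDegrees(Math.atan2(px - myX, py - myY));
        return (bearing + 360) % 360;
    }
}
```

The loop is a fixed-point iteration that settles quickly, because a Robocode robot (at most 8 pixels per tick) is always slower than a bullet (at least 11 pixels per tick). The sketch ignores walls, so a predicted position outside the battlefield would need to be clamped or discarded.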

Firing

DeaconBlues is somewhat weak in terms of firing, for the same reasons as its weakness in targeting. However, DeaconBlues does have a formula to determine the strength of its shots, accounting for factors such as enemy velocity, heading, bearing, and distance. As mentioned in the Targeting section, DeaconBlues fires as soon as it sees its target. Aside from quite possibly missing a moving target, this sometimes makes it difficult to hit even stationary targets, because the radar detects the target at the moment its sweep touches the edge of the enemy. As a result, DeaconBlues aims for the edge rather than the center of the enemy, and from difficult angles misses are much more common.
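Aiming at the center instead of the edge amounts to turning the gun to the bearing reported for the scanned robot rather than firing wherever the radar happens to point. The Java sketch below computes that turn. It assumes, as with Robocode's `getHeading()`, `getGunHeading()`, and `ScannedRobotEvent.getBearing()`, that headings are absolute degrees clockwise from north and that the scan bearing is relative to the robot's body; the class name is hypothetical.

```java
/** Hypothetical sketch: gun turn needed to point at a scanned robot's reported bearing. */
final class GunTurn {
    /** Normalizes an angle in degrees to the range [-180, 180). */
    static double normalizeRelative(double degrees) {
        double a = degrees % 360;  // Java's % keeps the sign of the dividend
        if (a >= 180) {
            a -= 360;
        }
        if (a < -180) {
            a += 360;
        }
        return a;
    }

    /**
     * myHeading and gunHeading are absolute; bearingToEnemy is relative to the body.
     * Returns how far to turn the gun right (a negative value means turn left),
     * always taking the shorter way around.
     */
    static double gunTurnRight(double myHeading, double gunHeading, double bearingToEnemy) {
        double absoluteBearing = myHeading + bearingToEnemy;
        return normalizeRelative(absoluteBearing - gunHeading);
    }
}
```

A robot would pass the returned value to `turnGunRight` (negative values turn left) before calling `fire`, so the shot leaves along the line to the enemy's reported position instead of along the radar's leading edge.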

Results

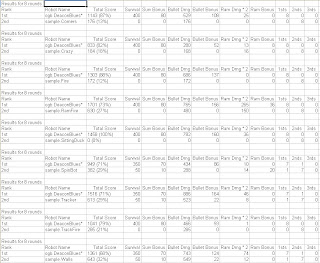

DeaconBlues can reliably beat any of the sample robots provided with Robocode. The results shown here may be slightly optimistic about the performance of DeaconBlues, as the scores are usually much closer. Nonetheless, DeaconBlues has an excellent record against the sample robots, losing only a single match each to SpinBot, Tracker, and Walls.

SpinBot is difficult to fight against largely because of its circular motion, which makes aiming hard. Crazy poses the same problem, but SpinBot's pattern is more regular. Since DeaconBlues moves along the walls, if SpinBot is near a wall that DeaconBlues must pass, some shots will inevitably be exchanged.

Losing to Tracker was a bit of a surprise. My best guess as to what happened is that DeaconBlues was stuck in a corner at some point where Tracker could shoot at it while DeaconBlues was too focused on escaping to fight back.